Customer feedback plays a crucial role in shaping any service-oriented company, and Uber is no exception. With thousands of riders using the service daily, analyzing customer sentiment can provide invaluable insights into satisfaction levels, areas for improvement, and overall brand perception. In this particular project, I explore a comprehensive approach to sentiment analysis of 2024 Uber customer reviews, using advanced Natural Language Processing (NLP) techniques and deep learning models.

The first step in any sentiment analysis project is data preparation. Our dataset contains thousands of Uber customer reviews from 2024, including details such as user ratings, comments, and timestamps. However, raw data often contains noise and requires thorough preprocessing before analysis. To ensure accuracy, I processed the data in the following steps:

Data Cleaning: I removed columns such as user names, images, and timestamps that didn’t contribute to sentiment analysis. I also filtered out incomplete or empty reviews.

Text Preprocessing: I standardized the text by converting it to lowercase, stripping punctuation, and removing HTML tags. Spelling corrections and emoji replacement (e.g., converting 😃 to “happy face”) ensured that each review was clean.

Additionally, lemmatization reduced words to their base forms (so “running” and “run” were treated the same), and stop words like “is,” “the,” and “and” were removed.
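The cleaning and preprocessing steps above can be sketched roughly as follows. This is a minimal standard-library illustration, not the exact pipeline used in the project: the column names `content` and `score`, the tiny emoji and stop-word tables, and the helper names `preprocess` and `clean_reviews` are all assumptions. Spelling correction and lemmatization are left out because they need a dedicated NLP library.

```python
import html
import re
import string

# Hypothetical, deliberately tiny lookup tables; a real pipeline would use fuller lists.
EMOJI_TO_TEXT = {"😃": "happy face", "😡": "angry face"}
STOP_WORDS = {"is", "the", "and", "a", "was"}

TAG_RE = re.compile(r"<[^>]+>")
# Covers ASCII punctuation only; curly quotes and similar would need extra handling.
PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def preprocess(review: str) -> str:
    """Normalize one review: strip HTML, map emoji to words, lowercase, drop punctuation and stop words."""
    text = html.unescape(review)          # decode entities such as &amp;
    text = TAG_RE.sub(" ", text)          # remove HTML tags
    for emoji, label in EMOJI_TO_TEXT.items():
        text = text.replace(emoji, f" {label} ")
    text = text.lower().translate(PUNCT_TABLE)
    tokens = [t for t in text.split() if t not in STOP_WORDS]
    return " ".join(tokens)


def clean_reviews(rows):
    """Drop empty reviews and keep only the fields assumed to matter for sentiment analysis."""
    cleaned = []
    for row in rows:
        content = (row.get("content") or "").strip()
        if not content:
            continue  # skip incomplete or empty reviews
        cleaned.append({"score": row.get("score"), "content": preprocess(content)})
    return cleaned
```

Calling `clean_reviews` on a list of dictionaries (one per review) returns only the non-empty reviews, each reduced to its rating and normalized text.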

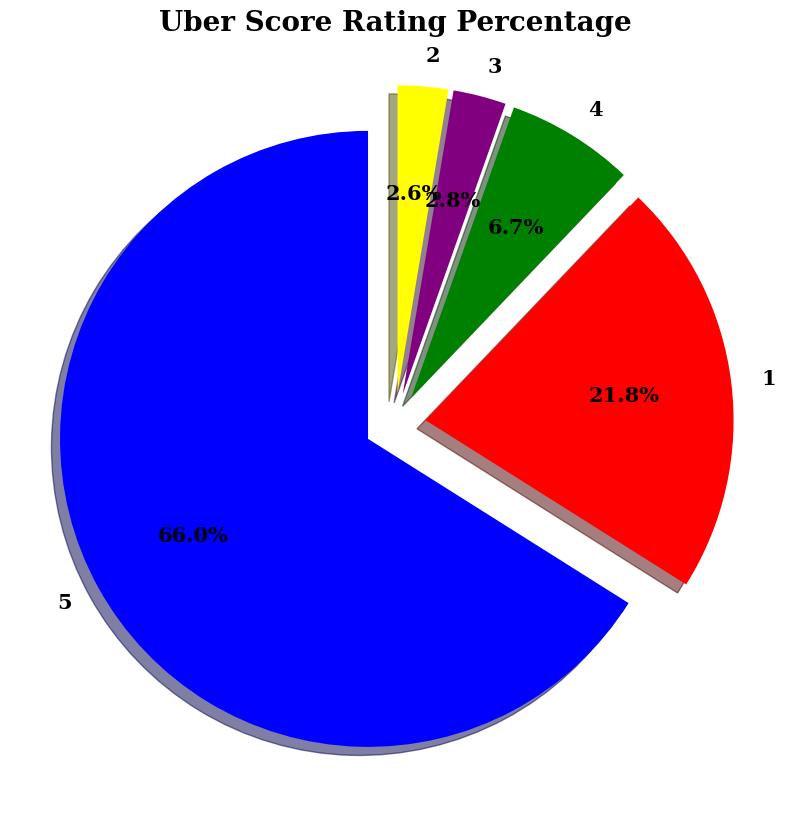

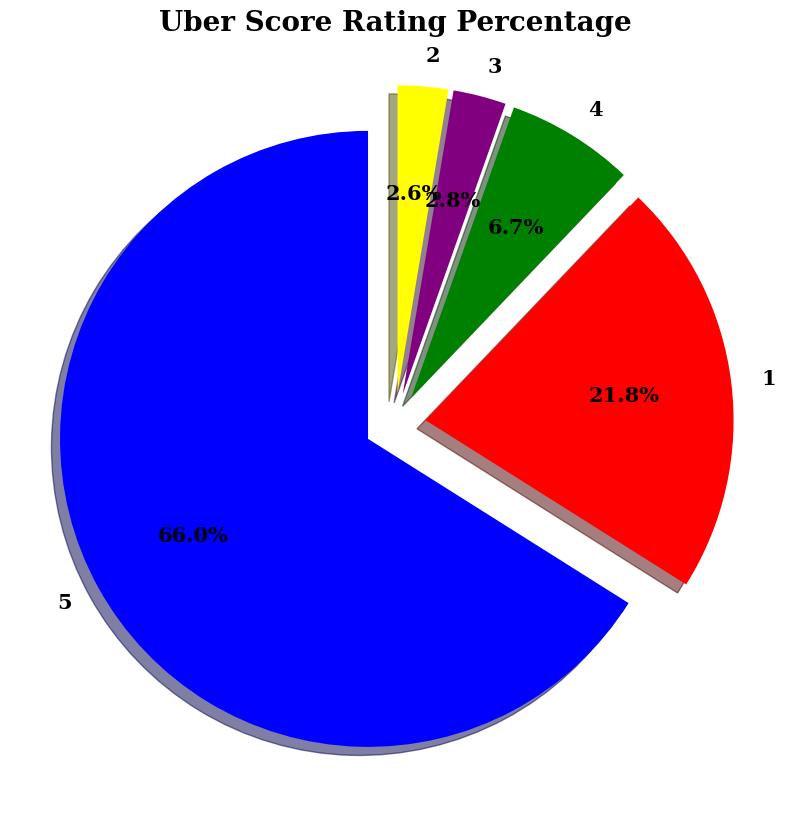

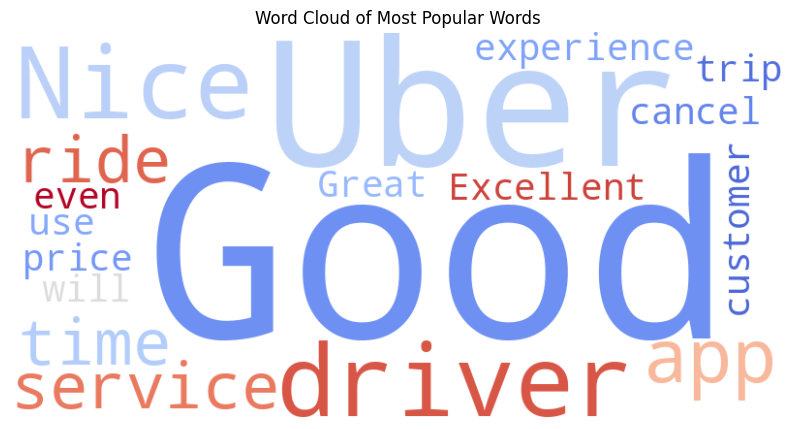

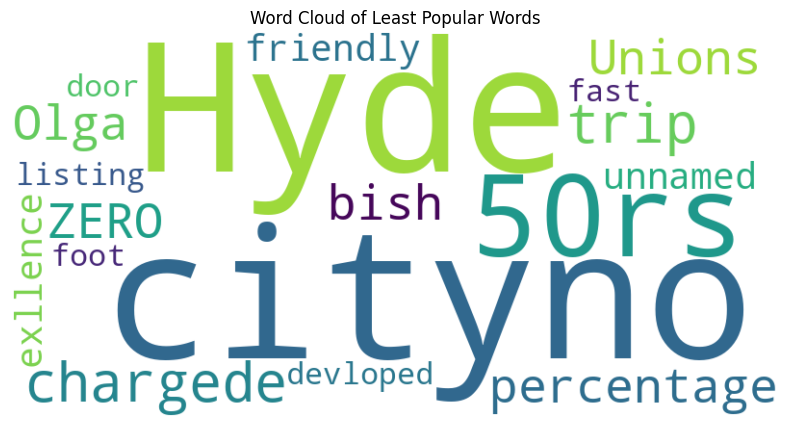

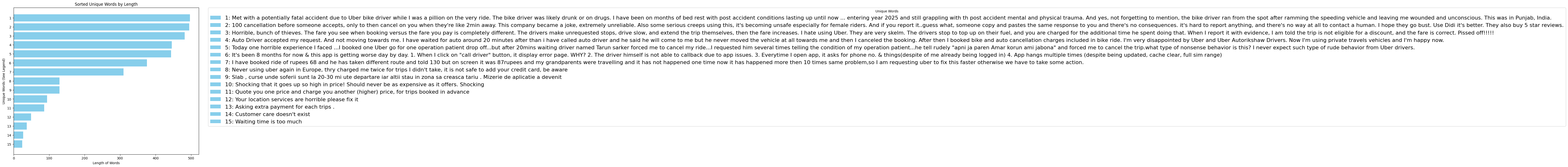

A word cloud generated from the reviews showed that positive adjectives like “good,” “great,” and “nice” were among the most frequent, suggesting that the bulk of reviews were positive. Meanwhile, words that appeared less often were either out of context or seemed to reference very specific entities, such as a driver's name or a location.
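A word cloud is essentially a visual rendering of word frequencies, so the underlying counts can be sketched with the standard library alone. The helper name `top_words` and the sample reviews are hypothetical, and the snippet assumes the reviews have already been preprocessed into space-separated tokens.

```python
from collections import Counter


def top_words(reviews, n=3):
    """Return the n most frequent tokens across already-preprocessed reviews."""
    counts = Counter()
    for review in reviews:
        counts.update(review.split())
    return counts.most_common(n)


# Hypothetical sample data, for illustration only.
sample = ["good ride", "great driver good", "good nice"]
print(top_words(sample, n=1))
```

The resulting (word, count) pairs are the kind of input a plotting or word-cloud library would then turn into a picture.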

To extract meaningful sentiment trends, I experimented with three state-of-the-art models:

Each model was tested on the dataset, and their results were compared based on precision, recall, and overall accuracy.
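For reference, the three comparison metrics can be computed from true and predicted labels as in the sketch below. It assumes a binary positive/negative labeling and a hypothetical helper name `binary_metrics`; the project itself may have used a library routine or a multi-class variant.

```python
def binary_metrics(y_true, y_pred, positive="positive"):
    """Compute precision, recall, and accuracy for one class treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        # Of the reviews predicted positive, how many really were positive.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of the truly positive reviews, how many the model found.
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        # Share of all reviews labeled correctly.
        "accuracy": correct / len(pairs) if pairs else 0.0,
    }


# Hypothetical labels, for illustration only.
y_true = ["positive", "positive", "negative", "negative"]
y_pred = ["positive", "positive", "positive", "negative"]
metrics = binary_metrics(y_true, y_pred)
```

Precision and recall are worth reporting alongside accuracy here: when most reviews are positive, a model can reach high accuracy while still missing many negative reviews.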

Our analysis revealed distinct patterns among different customer groups. Positive reviews frequently mentioned “friendly drivers,” “comfortable rides,” and “quick pickups.” Conversely, negative reviews raised concerns about “long wait times,” “poor vehicle quality,” and “rude driver interactions.”

High dissatisfaction around driver conduct or slow service points to the need for refined driver guidelines and better route optimization.

Errors in pick-up or destination coordinates disrupt journeys and cause delays. Enhancing GPS technology and allowing users to confirm locations could lessen these issues.

Frequent cancellations result in irregular wait times and undermine trust. Stricter cancellation policies and enhanced driver training may provide relief.

Multiple charges or unexpected fees, often tied to driver lateness, sap customer confidence. Transparent billing practices and clear communication may restore trust.

Based on negative feedback trends, Uber could implement the following solutions: